Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Subscribe to our daily and weekly newsletters for the latest updates and content from the industry’s leading AI site. learn more

Chinese AI developer DeepSeek, known as a leading AI developer with advanced open source technologies, today released a new flagship model: DeepSeek-V3.

Available via Hugging Face under the company’s license agreement, the new model comes with 671B parts but uses an integrated architecture and experts to activate only selected parts, to be able to perform the assigned tasks accurately and efficiently. According to benchmarks shared by DeepSeek, the offering is already on top of the charts, leading the way, including. Meta’s Llama 3.1-405Band closely compare the performance of closed-loop models from Anthropic and OpenAI.

This release marks another major development that closes the gap between closed and open AI. Finally, DeepSeek, which started as an offshoot of a Chinese quantitative hedge fund. High-Flyer Capital Managementthey hope that these developments will pave the way for Artificial General Intelligence (AGI), where models will have the ability to understand or learn any intellectual task possible.

Like its predecessor DeepSeek-V2, the new, larger version uses the same circular architecture. Multi-head latent attention (MLA) and DeepSeekMoE. This method ensures that it maintains good training and guidance – with unique and shared “experts” (individually, small neural networks within the main model) introducing 37B parts from 671B for each signal.

While the basic architecture ensures the powerful performance of DeepSeek-V3, the company has also released two new features to further push the bar.

The first is a method of helping to reduce the load without loss. This monitors and adjusts professional assets for efficient use without disrupting overall performance. The second is multi-signal prediction (MTP), which allows the model to predict many future signs simultaneously. This innovation not only increases the learning ability but allows the model to perform three times faster, generating 60 signals per minute.

“During the training period, we trained DeepSeek-V3 on 14.8T high-quality and diverse tokens… technical paper detailing the new model. “In the first stage, the length of the story is expanded to 32K, and in the second stage, it is expanded to 128K. Following this, we performed post-learning, including Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL) on the basis of DeepSeek-V3, to match the data People like to open up what they can. At the post-education stage, we distill creativity from DeepSeekR1 series of modelsand in the meantime carefully maintain a balance between accuracy of model and length of generations.”

In particular, during the training process, DeepSeek used several optimization and algorithmic algorithms, including the FP8 hybrid training method and the DualPipe pipeline matching algorithm, to reduce the cost of the process.

In total, it claims to have completed all DeepSeek-V3 training in approximately 2788K H800 GPU hours, or approximately $5.57 million, based on a rental price of $2 per GPU hour. This is far less than the hundreds of millions of dollars spent on teaching major languages.

Llama-3.1, for example, is said to have been trained with more than $500 million.

Despite the economic studies, DeepSeek-V3 has emerged as the most powerful open source model in the market.

The company ran several benchmarks to compare the AI’s performance and found that it outperformed open-source models, including Llama-3.1-405B and Qwen 2.5-72B. It even surpasses closed sources GPT-4o on most benchmarks, except for English SimpleQA and FRAMES – where the OpenAI version was ahead with scores of 38.2 and 80.5 (vs 24.9 and 73.3), respectively.

In particular, the performance of DeepSeek-V3 stood out in the Chinese benchmarks and mathematics, outperforming all its peers. On the Math-500 test, she scored a 90.2, with Qwen scoring the next best 80.

The only model that was able to challenge DeepSeek-V3 was Anthropic’s Claude 3.5 Sonnetbest practices are MMLU-Pro, IF-Eval, GPQA-Diamond, SWE Verified and Aider-Edit.

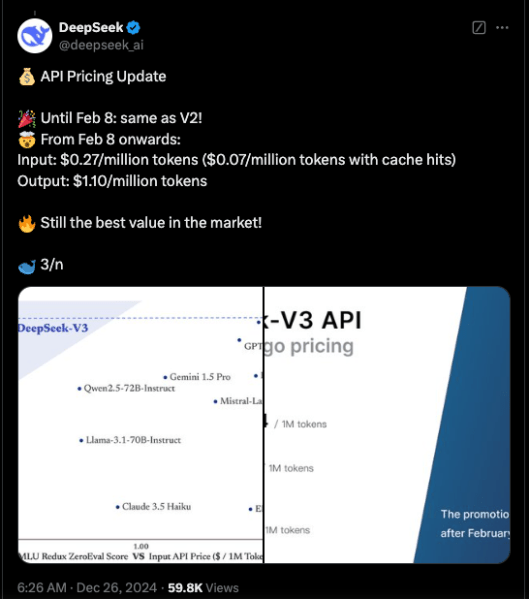

https://twitter.com/deepseek_ai/status/1872242657348710721

This project shows that open source is closing in on closed versions, promising similar functionality for different projects. The development of such systems is very good for the industry as it can eliminate the chance of a dominant AI player to dominate the game. It also gives businesses a number of options to choose from and work with to improve their stacks.

Currently, the DeepSeek-V3 code is available via GitHub under the MIT license, while the model is provided under the license of the company. Companies can also test a new brand through DeepSeek ChatChatGPT as a platform, and access to the API for commercial use. DeepSeek offers an API on same price as DeepSeek-V2 until February 8. After that, it will charge $0.27/million input tokens ($0.07/million tokens and cache hits) and $1.10/million output tokens.